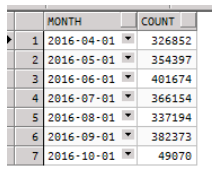

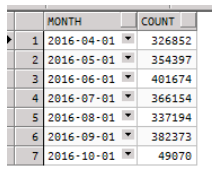

Consider a table, LOG_DATA, with lots of rows:

select trunc( LOG_TIME, 'MM' ) as MONTH, count(1) as Count

from Log_Data

group by trunc(LOG_TIME,'MM')

order by trunc(LOG_TIME,'MM');

Perhaps this would be better served by a table with RANGE partitioning, using the INTERVAL syntax of 11g to automatically create partitions as data accumulates?

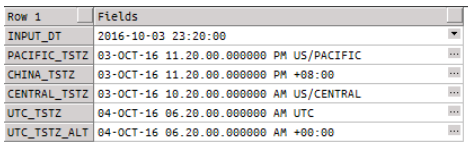

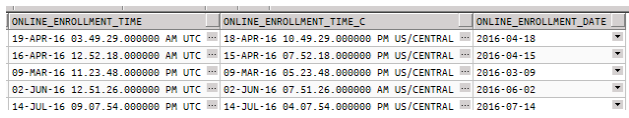

Unfortunately, LOG_TIME is data type timestamp with time zone, and we can’t create an interval partition on timestamp columns (yet!)

Also, we can’t use an expression in the interval definition. So, we need a DATE column:

create table Log_Data_P

(

ID integer,

PARENT_ID integer,

LOG_TIME timestamp with time zone default systimestamp,

STACK_LEVEL integer,

PACKAGE_NAME varchar2(30),

PROCEDURE_NAME varchar2(30),

LOG_LABEL varchar2(100),

LOG_VALUE varchar2(1000),

LOG_DURATION number,

PROC_DURATION number,

STEP_DURATION number,

LOG_CLOSED integer,LOG_DATE date default sysdate

)

partition by range ( LOG_DATE )

interval ( numtoyminterval( 1, 'MONTH' ) )

(

partition part_01 values less than ( date '2016-05-01' )

);

Here, we have added a LOG_DATE column to the end of the table structure. I’ve given it a default value and checked the code that writes to the table, to ensure there are no conflicts (there aren’t).

Now let’s insert into the new table, with the rows from the original (spanning several months):

insert into Log_Data_P

select

ID, PARENT_ID, LOG_TIME, STACK_LEVEL, PACKAGE_NAME, PROCEDURE_NAME, LOG_LABEL, LOG_VALUE,

LOG_DURATION, PROC_DURATION, STEP_DURATION, LOG_CLOSED, cast( LOG_TIME as DATE )

from Log_Data;

commit;

No errors… let’s see what partitions we have, at the end of this process:

select PARTITION_NAME, INTERVAL, HIGH_VALUE

from DBA_Tab_Partitions

where TABLE_NAME = 'LOG_DATA_P'

order by PARTITION_POSITION;

PARTITION_NAME INTERVAL HIGH_VALUE

------------------ ---------- -----------------------------------------------------------

PART_01 NO TO_DATE(' 2016-05-01 00:00:00', 'SYYYY-MM-DD HH24:MI:SS' )

SYS_P15277 YES TO_DATE(' 2016-06-01 00:00:00', 'SYYYY-MM-DD HH24:MI:SS' )

SYS_P15278 YES TO_DATE(' 2016-07-01 00:00:00', 'SYYYY-MM-DD HH24:MI:SS' )

SYS_P15280 YES TO_DATE(' 2016-08-01 00:00:00', 'SYYYY-MM-DD HH24:MI:SS' )

SYS_P15281 YES TO_DATE(' 2016-09-01 00:00:00', 'SYYYY-MM-DD HH24:MI:SS' )

SYS_P15279 YES TO_DATE(' 2016-10-01 00:00:00', 'SYYYY-MM-DD HH24:MI:SS' )

SYS_P15282 YES TO_DATE(' 2016-11-01 00:00:00', 'SYYYY-MM-DD HH24:MI:SS' )

7 rows selected.

Interesting… we have 6 new partitions over and above the original “PART_01” partition that we created. Obviously, Oracle has allocated some automatically-generated names to the partitions.

OK, so now the time has come to perform some pruning on our log table. Let’s delete the oldest partition:

SQL> alter table Log_Data_P drop partition PART_01;

ORA-14758: Last partition in the range section cannot be dropped

Uh-oh. It’s not as simple as we’d hoped. More about dropping partitions in this excellent post here:

Follow up #1 – OK, so try something sneaky, and drop the second-to-last partition

I had an idea. What if we define the table with an artificially small initial partition, with a start date that is earlier than any data in the table?

If we take a look at our Log_Data table today:

SQL> select count(1), min(LOG_TIME) from Log_Data;

COUNT(1) MIN(LOG_TIME)

---------- -----------------------------------

1172660 12-OCT-16 10.57.17.516528 PM -05:00

1.1 million rows, since October 12.

Now let’s define the Log_Data_P table again, with a start date of 2016-05-01 as described above, and insert the rows from our Log_Data table.

What do the partitions look like now?

select PARTITION_NAME, INTERVAL, HIGH_VALUE

from DBA_Tab_Partitions

where TABLE_NAME = 'LOG_DATA_P'

order by PARTITION_POSITION;

PARTITION_NAME INTERVAL HIGH_VALUE

---------------- -------- -------------------------------------

PART_01 NO TO_DATE(' 2016-05-01 00:00:00', ...

SYS_P17402 YES TO_DATE(' 2016-11-01 00:00:00', ...

SYS_P17403 YES TO_DATE(' 2016-12-01 00:00:00', ...

SYS_P17404 YES TO_DATE(' 2017-01-01 00:00:00', ...

“PART_01” won’t have any rows in it, but it defines the interval for future partitions. Now imagine some time goes by, and we don’t want the oldest month of data… so, let’s drop October, ‘SYS_P17402’:

SQL> alter table Log_Data_P drop partition SYS_P17402;

Table altered

select PARTITION_NAME, INTERVAL, HIGH_VALUE

from DBA_Tab_Partitions

where TABLE_NAME = 'LOG_DATA_P'

order by PARTITION_POSITION;

PARTITION_NAME INTERVAL HIGH_VALUE

---------------- -------- ------------------------------------

PART_01 NO TO_DATE(' 2016-05-01 00:00:00', ...

SYS_P17403 YES TO_DATE(' 2016-12-01 00:00:00', ...

SYS_P17404 YES TO_DATE(' 2017-01-01 00:00:00', ...

What’s the earliest row in the table?

SQL> select count(1), min(LOG_TIME) from Log_Data_P;

COUNT(1) MIN(LOG_TIME)

---------- -----------------------------------

859906 01-NOV-16 12.00.02.397712 AM -05:00

Success!

Now we just need a way to calculate the name of the partition based on, say, SYSDATE-180 (or whatever our aging specification is) and we’re golden.

Recent Comments