It’s time to take another look at some date/time conversion techniques…

Consider reading a DATE value from some source system, in a known time zone, and needing to convert it into some other time zone before saving into the destination table:

with

src_date as (

select

-- ---------------------------------------------------------------------

-- Here's a date value from the source system. From the value itself

-- we can't tell anything significant about it:

-- ---------------------------------------------------------------------

to_date('2016-10-03 23:20','yyyy-mm-dd hh24:mi') as INPUT_DT

from dual

),

assume_data as (

select

INPUT_DT,

-- --------------------------------------------------------------------------

-- If we know the value is a US/Pacific time zone value, we can declare that:

-- --------------------------------------------------------------------------

from_tz( cast( INPUT_DT as TIMESTAMP) , 'US/Pacific' ) as PACIFIC_TSTZ,

-- --------------------------------------------------------------------------

-- Alternatively, what if we know it is in China Standard Time ?

-- --------------------------------------------------------------------------

from_tz( cast( INPUT_DT as TIMESTAMP) , '+8:00' ) as CHINA_TSTZ

from src_date

)

select

-- ----------------------------------------------------------------

-- Now we have values that declare what time zone they belong to,

-- we can easily transform the value into datetimes for a different time zone:

-- ----------------------------------------------------------------

INPUT_DT,

PACIFIC_TSTZ,

CHINA_TSTZ,

-- ----------------------------------------------------------------

-- convert the China Standard Time value into US/Central:

-- ----------------------------------------------------------------

CHINA_TSTZ at time zone 'US/Central' as CENTRAL_TSTZ ,

-- ----------------------------------------------------------------

-- convert the US/Pacific time into UTC:

-- ----------------------------------------------------------------

PACIFIC_TSTZ at time zone 'UTC' as UTC_TSTZ,

PACIFIC_TSTZ at time zone '+0:00' as UTC_TSTZ_ALT

from assume_data;

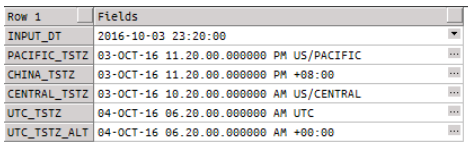

Results:

For example, when loading DATE values from C2R (Pacific time) and storing them in EDW (UTC) we could use the following expression:

cast( from_tz(cast( INPUT_DT as TIMESTAMP), 'US/Pacific') at time zone 'UTC' as DATE )

That is, if we really needed a DATE data type. I believe Oracle DB would handle the outer cast automatically if we attempted to save the TIMESTAMP+TZ value into a destination table’s DATE column

For more fun:

Recent Comments