A very nice article here, for those of you with an Oracle account:

Thanks to my colleague Bruce for that one.

Reference:

I’m not sure these instructions are correct. Investigating further…

The correct RegEdit node appears to be

HKEY_CURRENT_USER \ Software \ Microsoft \ Windows Live \ Writer \ Weblogs \ {GUID} \ UserOptionOverrides

The next question is, does it actually work? It appears to. At least, it adds the Tags box to the UI:

Update: Well, it looks like it would work, but it doesn’t seem to persist. The tag box is no longer visible in my instance of LiveWriter.

You will need:

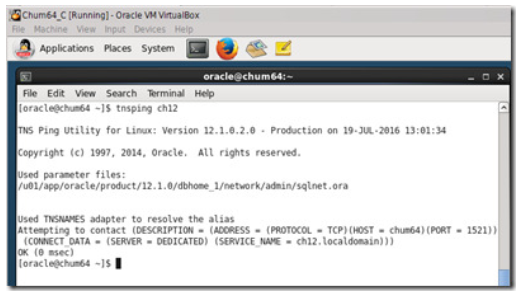

We could point at the Production system, but this is not a great idea, especially when you’re testing. I use a local virtual machine (running Oracle Linux 6.6) with an instance of Oracle Database 12c. This is where I do all my test builds, pulled from the latest branch in source control.

I’m going to assume you’ve got this covered… otherwise, this is a good starting reference:

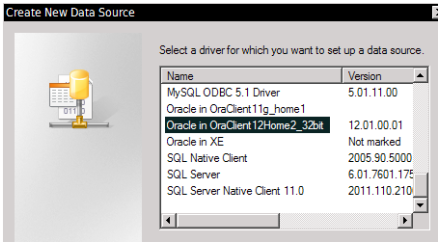

(We could try using the default Microsoft ODBC Driver for Oracle, but I’ve never got it to work.)

My local operating system is 64-bit Windows. However, my installation of Enterprise Architect is 32-bit. This means that it will use the 32-bit ODBC components, and I need to use the 32-bit ODBC Data Source Administrator to configure a data source (DSN).

And if Life weren’t complicated enough:

But, the bottom line is:

On a 64bit machine when you run “ODBC Data Source Administrator” and created an ODBC DSN, actually you are creating an ODBC DSN which can be reachable by 64 bit applications only.

But what if you need to run your 32bit application on a 64 bit machine ? The answer is simple, you’ll need to run the 32bit version of “odbcad32.exe” by running “c:\Windows\SysWOW64\odbcad32.exe” from Start/Run menu and create your ODBC DSN with this tool.

Got that?

It’s mind-boggling. The UI looks identical too.
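
Since both tools are called odbcad32.exe, the rule is easier to keep straight as a tiny lookup. This is a minimal sketch, assuming a default C:\Windows install directory; the function name odbc_admin_path is made up for illustration. The SysWOW64 path is the one quoted above, and System32 is where the 64-bit tool lives on 64-bit Windows.

```python
import ntpath


def odbc_admin_path(app_bits, windows_dir=r"C:\Windows"):
    """Return the ODBC Data Source Administrator matching an application's bitness.

    Applies to 64-bit Windows only. Both executables share the name
    odbcad32.exe; only the directory differs.
    """
    if app_bits == 32:
        subdir = "SysWOW64"   # 32-bit tool, for 32-bit apps such as a 32-bit EA install
    elif app_bits == 64:
        subdir = "System32"   # 64-bit tool, despite the "32" in the directory name
    else:
        raise ValueError("app_bits must be 32 or 64")
    return ntpath.join(windows_dir, subdir, "odbcad32.exe")


print(odbc_admin_path(32))
print(odbc_admin_path(64))
```

A DSN created with one of these tools is only visible to applications of the same bitness, which is why the 32-bit one is needed here.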

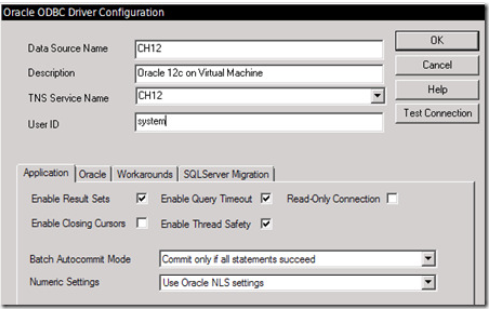

We’re not finished. We need to enter some details:

The only critical parameter here is the TNS Service Name which needs to match whatever you’ve set up in the TNSNAMES.ORA config file, for your target DB instance.

Here, I’ve used a user name of SYSTEM because this is my test Oracle instance. Also, it will allow me to read from any schema hosted by the DB, which means I can use the DSN for any test schema I build on the instance.
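
Because the TNS Service Name has to match an entry in TNSNAMES.ORA, it can save a round of trial and error to check the name before saving the DSN. The following is a rough sketch, not a full parser: it assumes each alias starts in the first column and is followed by "=", which is the usual layout but not guaranteed. The TESTDB entry and the helper names are hypothetical.

```python
import re

# An alias is assumed to start in column 0; nested parameters are
# normally indented or start with "(" and so do not match.
ALIAS_RE = re.compile(r"^([A-Za-z][\w.\-]*)\s*=")


def tns_aliases(tnsnames_text):
    """Collect the top-level net service names found in tnsnames.ora text."""
    aliases = set()
    for line in tnsnames_text.splitlines():
        match = ALIAS_RE.match(line)
        if match:
            aliases.add(match.group(1).upper())
    return aliases


def service_is_defined(service_name, tnsnames_text):
    """True if the name typed into the DSN dialog has a matching alias."""
    return service_name.upper() in tns_aliases(tnsnames_text)


# Hypothetical entry for a local test VM
SAMPLE = """
# local test instance
TESTDB =
  (DESCRIPTION =
    (ADDRESS = (PROTOCOL = TCP)(HOST = localhost)(PORT = 1521))
    (CONNECT_DATA = (SERVICE_NAME = testdb))
  )
"""

print(service_is_defined("testdb", SAMPLE))
```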

Now that the DSN is created, we can move on to working in Enterprise Architect.

Note: I’m using images from a Company-Internal How-To guide that I authored. I feel the need to mask out some of the details. Alas I am not in a position to re-create the images from scratch. I debated omitting the images entirely but that might get confusing.

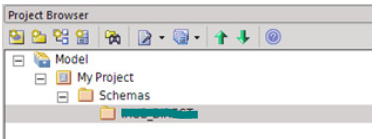

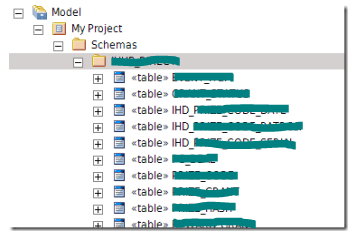

In Enterprise Architect, we have a clean, empty project, and we didn’t use Wizards to create template objects. It is really just a simple folder hierarchy:

Right-click on the folder and select from the cascading drop-down menu of options:

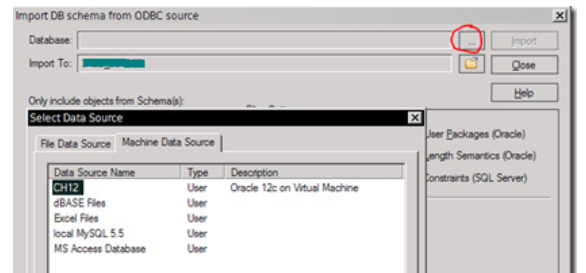

This will bring up the Import DB schema from ODBC source dialog.

Click on the chooser button on the right side of the “Database” field to bring up the ODBC Data Source chooser:

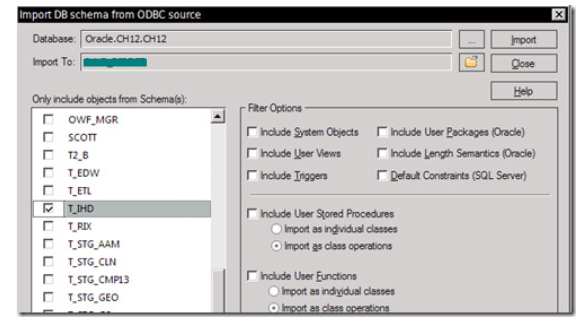

The DSN we created should be available under the Machine Data Source tab. Select it, and click OK. We should be prompted to enter a password for our pre-entered User Name, after which Enterprise Architect will show us all the schemas to which we have access on the DB:

Previously, on this instance, I ran the database build scripts and created a set of test schemas using the T_ name prefix.

We’re going to import the contents of the T_IHD schema into our project, so we check the “T_IHD” schema name.

Our intention is to import (create) elements under the package folder, for each table in the T_IHD schema.

Review the filter options carefully!

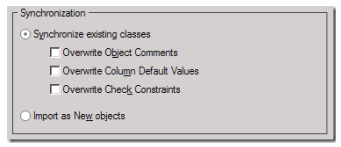

The default settings are probably correct, if we are only interested in creating elements for each table. Note the Synchronization options:

The package folder is empty, so you might think that we need to change this to “Import as New objects”. Don’t worry. New objects will be created if they don’t already exist.

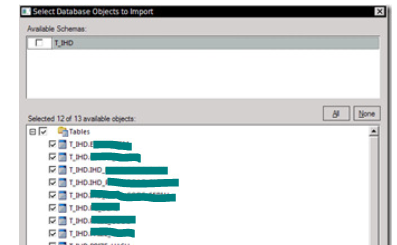

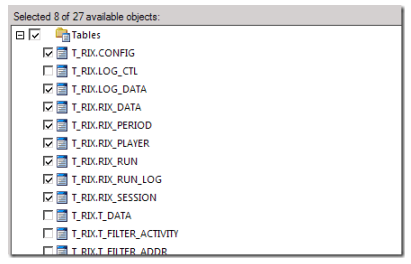

Now click the “Import” button at the top right of the dialog. After a short wait, during which EA is retrieving metadata from the database, the contents of the T_IHD schema will be presented to us in the “Select Database Objects to Import” dialog:

We are only interested in the tables, so check the [x] Tables checkbox and all the contained tables will be selected.

Often there are objects we’re not interested in including in the Data Model. For example, the TEMP_EVENT table might only be used as an interim location for data, during some business process, and not worth complicating the model with.

We can clear the checkbox next to the TEMP_EVENT table name to skip it.

Now, having validated our selection, we can press the OK button to start the import.

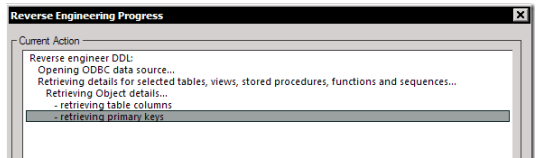

The process may take some time… when it completes, we can close the Import dialog, or select another schema and destination folder and import a different schema.

The table elements should now be visible in the Project hierarchy:

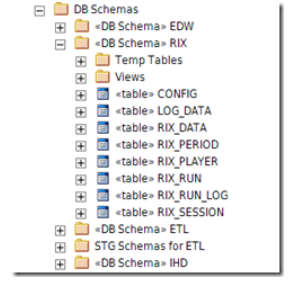

We need to refresh the RIX schema tables in the model. This schema is already in the model, with lots of additional element and attribute-specific notes that we need to retain.

Two important things to note:

We need to refresh the schema in the model, with the new structure from the DB. The process to follow is almost exactly the same as the clean import described earlier:

Select the package folder

Now, in this example we are only interested in refreshing those tables that are already in the model, in the current folder. That represents a subset of the total set of tables that are in the current DB schema:

In the image above, LOG_CTL is a table we don’t care about (not in the model); and the T_* tables are temporary tables that, in the model, are in a different folder. We’ll do those next (see below).
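
The selection rule described above can be written down as a small sketch. It only plans which boxes to check; it does not talk to Enterprise Architect. LOG_CTL and the T_ prefix come from the example; RUN_LOG, T_STAGE, and the function name plan_refresh are hypothetical.

```python
from fnmatch import fnmatchcase


def plan_refresh(db_tables, model_tables, temp_pattern="T_*"):
    """Split the tables found in the DB schema into three groups.

    refresh: already in the model folder, so check them in this import
    temp:    temporary tables, handled in a second pass on another folder
    skip:    not in the model at all, so leave them unchecked
    """
    model = set(model_tables)
    refresh, temp, skip = [], [], []
    for name in db_tables:
        if fnmatchcase(name, temp_pattern):
            temp.append(name)
        elif name in model:
            refresh.append(name)
        else:
            skip.append(name)
    return refresh, temp, skip


refresh, temp, skip = plan_refresh(
    db_tables=["LOG_CTL", "RUN_LOG", "T_STAGE"],
    model_tables=["RUN_LOG"],
)
print(refresh, temp, skip)
```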

After the import process is completed, we should be able to drill down and see the schema changes reflected in the updated model.

We can repeat the process for the Temp Tables sub-package, this time selecting only the T_* temporary tables in the import selection.

Summary:

With careful set-up, it is possible to import and refresh table structures from database schemas into a project in Enterprise Architect, without over-writing existing documentation and attribute notes.

Some very nice articles, for reference:

Oops, Jamie Thomson seems to have vanished from the web. However, his posts are still available via the Wayback Machine:

I had some doubts that I was seeing all the changes from all developers, so I did some testing.

(I ran a git log command after each step to see what changes would show up.)

Step 1: Add a new file, and commit locally:

Mon Jul 11 21:05:41 2016 - Colin Nicholls : (Testing) Added a file locally

A misc_scripts/Testing_git_log.txt

Step 2: Pull from remote to refresh locally

Step 3: Push my local changes to the remote (origin).

Step 4: Edit the file, and commit. I misunderstood the use of the [x] Amend Last commit checkbox…

Mon Jul 11 21:10:28 2016 - Colin Nicholls : (Testing) Editing a file locally

A misc_scripts/Testing_git_log.txt

Note that the time-stamp has changed, and the comment text, but it is the same “Add” operation.

Step 5: Edit the file again, and commit. This time, I did not check [ ] Amend last commit, and I commented thusly:

Mon Jul 11 21:10:28 2016 - Colin Nicholls : (Testing) Editing a file locally

A misc_scripts/Testing_git_log.txt

Mon Jul 11 21:12:43 2016 - Colin Nicholls : (testing) [ ] Amend last commit (did not check)

M misc_scripts/Testing_git_log.txt

Step 6: Do a push to remote repository:

git.exe push --progress "origin" master:master

To https://github.abacab.com/zyxx/zyxx-db.git

! [rejected] master -> master (non-fast-forward)

error: failed to push some refs to 'https://github.abacab.com/zyxx/zyxx-db.git'

hint: Updates were rejected because the tip of your current branch is behind

hint: its remote counterpart. Integrate the remote changes (e.g.

hint: 'git pull ...') before pushing again.

hint: See the 'Note about fast-forwards' in 'git push --help' for details.

git did not exit cleanly (exit code 1) (1981 ms @ 7/11/2016 13:14:39)

OK, I don’t understand why “the tip of my current branch is behind its remote counterpart”, but it is telling me I should do another pull before pushing, so:

Step 7: Pull from the remote to update locally:

git.exe pull --progress --no-rebase -v "origin"

From https://github.abacab.com/zyxx/zyxx-db

= [up to date] master -> origin/master

= [up to date] cmp13_convert -> origin/cmp13_convert

= [up to date] dev_2015_02_A -> origin/dev_2015_02_A

= [up to date] dyson -> origin/dyson

= [up to date] edison -> origin/edison

= [up to date] fermi -> origin/fermi

= [up to date] gauss -> origin/gauss

= [up to date] grendel -> origin/grendel

= [up to date] hubble -> origin/hubble

= [up to date] prod_2015_04 -> origin/prod_2015_04

Auto-merging misc_scripts/Testing_git_log.txt

CONFLICT (add/add): Merge conflict in misc_scripts/Testing_git_log.txt

Automatic merge failed; fix conflicts and then commit the result.

git did not exit cleanly (exit code 1) (2231 ms @ 7/11/2016 13:15:42)

Now I’m in conflict with myself!?
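
A likely explanation for both the rejected push and the add/add conflict: the commit amended in Step 4 had already been pushed in Step 3. Amending does not edit a commit in place. It creates a replacement with a new ID, so the remote still holds the original "Add" while the local branch holds a different "Add" of the same file. The sketch below is a toy model of that, not git's real object format (real git hashes the tree, parents, author, committer and message); the parent ID and the v1/v2/v3 contents are made up.

```python
import hashlib


def commit_id(parent, message, content):
    """Toy commit ID: SHA-1 over parent, message and content."""
    payload = "\n".join([parent or "", message, content]).encode("utf-8")
    return hashlib.sha1(payload).hexdigest()


def ancestors(tip, parents):
    """Walk first-parent links from tip back to the root."""
    chain = []
    while tip is not None:
        chain.append(tip)
        tip = parents.get(tip)
    return chain


base = "0" * 40  # hypothetical commit that both sides already share

pushed = commit_id(base, "(Testing) Added a file locally", "v1")       # Steps 1 and 3
amended = commit_id(base, "(Testing) Editing a file locally", "v2")    # Step 4, with Amend checked
followup = commit_id(amended, "(testing) [ ] Amend last commit (did not check)", "v3")  # Step 5

parents = {base: None, pushed: base, amended: base, followup: amended}

# A push is only a fast-forward if the remote tip is an ancestor of the local tip.
remote_tip_in_local_history = pushed in ancestors(followup, parents)
print(remote_tip_in_local_history)  # False
```

Under this reading, the pull in Step 7 has to merge two unrelated "Add" commits for the same path, which is where the conflict comes from.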

Step 8: At this point, I resolved the conflict by selecting “mine” over “theirs”, and did another local commit. This time I got a special “merge commit” dialog that didn’t show any specific changed files, but clearly wanted to do something.

So what does the log say at this point?

Mon Jul 11 21:05:41 2016 - Colin Nicholls : (Testing) Added a file locally

A misc_scripts/Testing_git_log.txt

Mon Jul 11 21:10:28 2016 - Colin Nicholls : (Testing) Editing a file locally

A misc_scripts/Testing_git_log.txt

Mon Jul 11 21:12:43 2016 - Colin Nicholls : (testing) [ ] Amend last commit (did not check)

M misc_scripts/Testing_git_log.txt

Mon Jul 11 21:22:08 2016 - Colin Nicholls : Merge branch 'master' of https://github.abacab.com/zyxx/zyxx-db

Interesting:

Step 9: Pull; delete test file; commit; push

Mon Jul 11 21:05:41 2016 - Colin Nicholls : (Testing) Added a file locally

A misc_scripts/Testing_git_log.txt

Mon Jul 11 21:10:28 2016 - Colin Nicholls : (Testing) Editing a file locally

A misc_scripts/Testing_git_log.txt

Mon Jul 11 21:12:43 2016 - Colin Nicholls : (testing) [ ] Amend last commit (did not check)

M misc_scripts/Testing_git_log.txt

Mon Jul 11 21:22:08 2016 - Colin Nicholls : Merge branch 'master' of https://github.abacab.com/zyxx/zyxx-db

Mon Jul 11 21:35:21 2016 - Colin Nicholls : (testing) deleted file

D misc_scripts/Testing_git_log.txt

This needs further testing, perhaps, but I’m going to return to billable work at this point.

This is mostly for my own reference, so I don’t lose it.

C:> set path=C:\Programs\Git\bin;%PATH%

C:> D:

D:> cd /source_control/ABACAB/github/zyxx_db

D:> git log --name-status -10 > last_ten_updates.txt

So long as the current path is under the right repository directory, the git log command seems to pick up the right information, without being told what repository to interrogate.

The output is useful, but the default formatting isn’t ideal. I’m not so interested in the git-svn-id or commit id. There’s a comprehensive list of options…

Try:

git log --name-status --pretty=format:"%cd - %cn : %s" --date=iso

The results are close to what I’m used to with Subversion:

2016-06-25 21:54:13 -0700 - Colin Nicholls : Synchronizing with latest SVN version

M zyxx/db/trunk/DW/DIRECT_DW.pkb

M zyxx/db/trunk/DW/SAMPLE_DATA.pck

:

M zyxx/db/trunk/environments/PROD/db_build_UAT.config

M zyxx/db/trunk/environments/UAT/db_build.config

2016-06-02 17:41:59 +0000 - cnicholls : Re-run previous report asynch; clear STATUS_TEXT on re-run

M zyxx/db/trunk/RIX/RIX.pck

2016-06-01 23:05:29 +0000 - cnicholls : Added V_Rix_Run_Log

M zyxx/db/trunk/RIX/create_views.sql

2016-06-01 09:12:36 +0000 - fred : Prepare deployment script.

M zyxx/db/trunk/deployment_scripts/49/during_DW.sql

2016-06-01 02:27:39 +0000 - zeng : INH-1139: Offer Issue - DW Should handle the "Link" action for Tag. Fix bug.

M zyxx/db/trunk/DW/ABACAB_RI.pkb

What I don’t yet know is why the most recent change has a time zone of “-0700” and the others “+0000”. It may have something to do with the way the previous entries were imported. Notice the committer name is different in the most recent check-in, which was the first one I did from my working copy, after the initial import.
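
If the output is going to be post-processed rather than just read, the "%cd - %cn : %s" layout with --date=iso is regular enough to parse. This is a minimal sketch, assuming exactly that format string and --name-status output; the function name parse_log is made up, and the sample text is taken from the listing above.

```python
import re
from datetime import datetime

# Header line produced by --pretty=format:"%cd - %cn : %s" --date=iso
HEADER = re.compile(r"^(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2} [+-]\d{4}) - (.*?) : (.*)$")
# Status line produced by --name-status, e.g. "M<TAB>path/to/file"
CHANGE = re.compile(r"^([ACDMRT]\d*)\s+(\S.*)$")


def parse_log(text):
    """Turn git log text into a list of commits with their changed files."""
    commits = []
    for raw in text.splitlines():
        line = raw.strip()
        header = HEADER.match(line)
        if header:
            commits.append({
                "date": datetime.strptime(header.group(1), "%Y-%m-%d %H:%M:%S %z"),
                "committer": header.group(2),
                "subject": header.group(3),
                "files": [],
            })
            continue
        change = CHANGE.match(line)
        if change and commits:
            commits[-1]["files"].append((change.group(1), change.group(2)))
    return commits


SAMPLE = """\
2016-06-02 17:41:59 +0000 - cnicholls : Re-run previous report asynch; clear STATUS_TEXT on re-run
M\tzyxx/db/trunk/RIX/RIX.pck

2016-06-01 23:05:29 +0000 - cnicholls : Added V_Rix_Run_Log
M\tzyxx/db/trunk/RIX/create_views.sql
"""

for commit in parse_log(SAMPLE):
    print(commit["date"].isoformat(), commit["committer"], len(commit["files"]))
```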

My current format of choice:

git log --name-status --pretty=format:"%cd - %cn : %s" --reverse --date-order --date=local

However, “local” doesn’t seem to mean “my local time zone”. So, not sure what the best date format is.
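
One way to sidestep the question is to keep --date=iso, which records the offset explicitly, and convert afterwards. This is a sketch of that workaround, not a statement about what git's "local" does; the function name to_zone is made up, and the fixed -0700 zone is an assumption borrowed from the offset seen in the earlier listing.

```python
from datetime import datetime, timedelta, timezone


def to_zone(stamp, tz=None):
    """Parse a --date=iso timestamp and convert it to another zone.

    tz=None converts to whatever the operating system reports as local.
    """
    parsed = datetime.strptime(stamp, "%Y-%m-%d %H:%M:%S %z")
    return parsed.astimezone(tz)


# Assumed target zone: a fixed UTC-7 offset
pacific = timezone(timedelta(hours=-7))

converted = to_zone("2016-06-02 17:41:59 +0000", pacific)
print(converted.strftime("%Y-%m-%d %H:%M:%S %z"))  # 2016-06-02 10:41:59 -0700
```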

From

Essentially:

$ su

# usermod -a -G vboxsf user

This adds the user to the security group ‘vboxsf’ that has create/edit permissions.
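
To confirm the change took, the supplementary members of a group are listed in the fourth field of its /etc/group line. Here is a small sketch that checks this from the file's text; the GID 999 in the sample line and the function name group_members are made up.

```python
def group_members(group_file_text, group_name):
    """Return the supplementary members listed for a group in /etc/group text.

    Each line has the form name:password:gid:member1,member2
    """
    for line in group_file_text.splitlines():
        fields = line.strip().split(":")
        if len(fields) == 4 and fields[0] == group_name:
            return [member for member in fields[3].split(",") if member]
    return []


# Hypothetical line as it might look after running usermod
SAMPLE = "vboxsf:x:999:user\n"

print("user" in group_members(SAMPLE, "vboxsf"))
```

On a real system the text would come from reading /etc/group. The new membership normally only applies to sessions started after the change, so a log out and back in may be needed.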

We have a SQL query whose WHERE clause takes a date range as parameters. Ranges of 1 year or 2 years all work predictably. When I use a very large date range (i.e. “everything”), the Oracle dedicated server process crashes.

From the trace file:

*** 2016-05-20 22:06:12.735

*** SESSION ID:(630.42649) 2016-05-20 22:06:12.735

*** CLIENT ID:() 2016-05-20 22:06:12.735

*** SERVICE NAME:(SYS$USERS) 2016-05-20 22:06:12.735

*** MODULE NAME:(rix) 2016-05-20 22:06:12.735

*** CLIENT DRIVER:() 2016-05-20 22:06:12.735

*** ACTION NAME:(prepare_series(a)) 2016-05-20 22:06:12.735

Block Checking: DBA = 8236992, Block Type = Unlimited undo segment header

ERROR: Undo Segment Header Corrupted. Error Code = 14508

ktu4shck: starting extent(0xffff8000) of txn slot #0x6b is invalid.

Searching Google brought up a Russian blog page, that seems to be citing exactly the same issue:

*** MODULE NAME:(e:AR:bes:oracle.apps.xla.accounting.extract) 2015-10-01 06:37:00.659 -- станд.модуль OEBS

...

Block Checking: DBA = 14597504, Block Type = Unlimited undo segment header -- проверка UNDO

ERROR: Undo Segment Header Corrupted. Error Code = 14508 -- падает со специфичной для ANALYZE ошибкой (***)

ktu4shck: starting extent(0xffff8000) of txn slot #0x21 is invalid.

valid value (0 - 0x8000)

The gist of the post seems to indicate that they are using Oracle 12.1.0.2 and had set TEMP_UNDO_ENABLED=TRUE (a new feature in 12c).

This is interesting because we set this parameter true also, in our code.

Google Translate failed to come through with a useful translation, but it could be that they set this parameter FALSE in order to resolve the problem.

Previously, our test failed after ~2 hours. So far, having made the change to the parameter, it is still running after 4 hours. So, we’re optimistic.

I’m not really a fan of OS X. I’m sure the underlying OS is fine – after all, It’s a Unix system. I know this! – but the Finder; the keyboard layout; the relentless updates; but most of all the fuzzy text aggravated me.

After some success with a Bootable USB drive containing a 64-bit Linux Mint ISO, I decided to follow Clem’s excellent instructions here:

http://community.linuxmint.com/tutorial/view/1643

and install Linux Mint 17.1 on my 2013 iMac.

Now, I’m not crazy: I resized the primary OS X partition and divided up the remaining space, just as Clem recommended:

/dev/sda4 30 GB ext4

/dev/sda5 4 GB swap

/dev/sda6 500 GB ext4

After the Linux install completed, I also followed the “Fixing the boot order” instructions, about installing efibootmgr and making the EFI boot Linux first. Supposedly this was to achieve:

“The boot order should now indicate that it will run Mint first, and if that ever came to fail.. it would then run Mac OS. In other words our MacBook now boots into Grub. From there we can select Mint or press Escape and type “exit” to boot into Mac (we’ll fix the Mac grub entries to make it exit without having to type anything later on in this tutorial).”

I’m not sure what I did wrong… maybe nothing. Maybe we’re just expected to have a complete understanding of EFI and GRUB and bootloader configuration in general, at this point.

Anyway, bottom line: I never saw GRUB, or a menu of boot options, or anything. It just booted straight into Linux. Fortunately the Mac HD volume is visible and accessible under Linux, and I’ve been able to copy my files over as the need arises, from inside Linux Mint. Excellent.

But what if I want to execute a Mac OS X application natively, for some reason? Dammit, I want to dual boot this thing. The normal Option- key (Mac Boot menu) no longer works, and neither does Option-R (to get to the Mac Recovery partition).

After some research, I learned that pressing ‘c’ during the boot process gets us to the GRUB command line. Now I could use the ls -l command to list the partitions:

hd2,gpt1 fat efi

hd2,gpt2 hfsplus 'Mac HD'

hd2,gpt3 hfsplus 'Recovery HD'

hd2,gpt4 ext * 29 GiB

hd2,gpt5 - 5 GiB

hd2,gpt6 ext * 438 GiB

but this still left me with no understanding of how to boot into one of them. More research:

http://askubuntu.com/questions/16042/how-to-get-to-the-grub-menu-at-boot-time

$ sudo update-grub

It suggests adding the following to the /etc/grub.d/40_custom script:

menuentry "OS X" {

insmod hfsplus

set root=(hd1,gpt2)

chainloader /System/Library/CoreServices/boot.efi

}

Long story short: that works perfectly. After sudo update-grub and a reboot, we have a menu and an option that will boot into OS X.
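
The disk number GRUB assigns can differ from machine to machine (the ls listing above showed hd2, while the entry that worked uses hd1), so it may help to treat the root device as a parameter. This is a sketch that only renders the text of the stanza for pasting into /etc/grub.d/40_custom; the function name osx_menuentry is made up, and the right device value has to be found on the machine itself.

```python
def osx_menuentry(root_device, title="OS X"):
    """Render a GRUB menuentry that chainloads the OS X boot.efi."""
    lines = [
        'menuentry "%s" {' % title,
        "    insmod hfsplus",
        "    set root=(%s)" % root_device,
        "    chainloader /System/Library/CoreServices/boot.efi",
        "}",
    ]
    return "\n".join(lines) + "\n"


print(osx_menuentry("hd1,gpt2"))
```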

Thanks, Internet!

© 2024 More Than Four
